Workstation Research Provides New Insights

February 15, 2018

By Jon Peddie

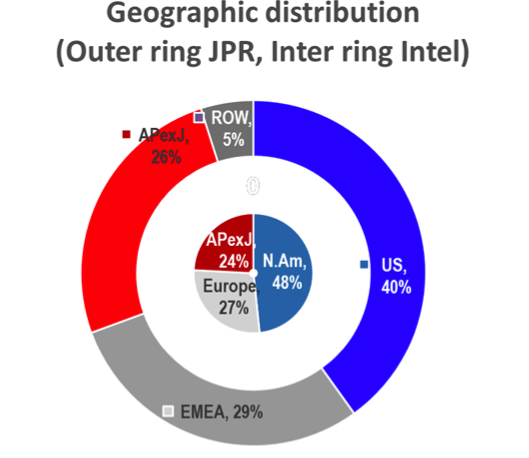

Intel commissioned a third-party research firm to gain new workstation insights through a blind research survey. There were over 2,500 responses from end users, IT decision makers (ITDMs) and managers at organizations across the top workstation verticals. The research firm performed qualitative interviews with 34 companies representing key workstation segments: Architecture, Engineering and Construction (AEC); Manufacturing; Media and Entertainment (M&E); Healthcare; Oil & Gas; and Financial Services. Quantitative responses were received from 1,482 technical decision makers (ITDMs) and 1,046 business decision makers (BDMs), 2,528 in total. Geographically, the responses broke down into 1,222 from North America, 695 from Europe, and 611 from Asia. Responses were evenly distributed across the key verticals and their most frequently used apps, with about 300 in each category and twice that for Manufacturing.

Fig. 1: Intel’s survey data correlated well with JPR’s geographic data.

Let’s dig into key insights from the research, and support them with results from some previous research conducted by Jon Peddie Research.

What is a Workstation?

The term workstation is commonly used in the industry, and most of the BDMs also use the term “High Performance Computers,” which to them means a machine that can run multiple programs on multiple monitors. The ITDMs tend to describe a workstation more in terms of solving their needs, saying that a workstation should have hyper-threading, multiple cores and an abundance of memory, or citing specifics such as: animators need dual-socket Intel Xeon processors operating at 3.5 GHz or higher, a top-end NVIDIA add-in board (AIB), an SSD, and as much memory as you can afford, at least 128 GB.

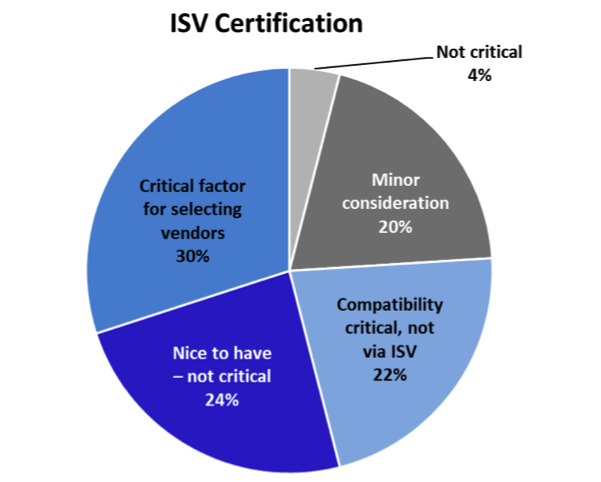

The Value of Application Certification

One of the distinguishing features of a workstation is the assurance it will run the most frequently used apps as they are designed, commonly referred to as “application certification.” During the development process, workstation suppliers work closely with independent software vendors (ISVs) to ensure the application’s special features and functionality are fully supported. ISVs like Autodesk, Bentley, Siemens, PTC and others work with add-in board (AIB) suppliers like AMD, NVIDIA and their partners. They also work closely with CPU suppliers like Intel and AMD to fine-tune their software drivers for three operating systems (Windows, Linux and macOS), so that special features and functions in the applications fully exploit all of the acceleration capabilities in the hardware, which are (hopefully) exposed through the driver.

In addition to three operating systems, there are three (or four) application program interface (API) standards that the workstation suppliers have to support: OpenGL, the most important, and, in some cases, DirectX. Two new APIs, Vulkan and Metal, are also being added. Not all the ISVs offer support for all the combinations, but that still suggests there can be up to 12 combinations of OS and API (three operating systems times four APIs) that must be tested across at least half a dozen AIBs. Add in half a dozen CPUs, and that expands the potential number of certifications to 432 (12 × 6 × 6). They don’t test all of these scenarios, but testing a dozen or two is not unusual.
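The arithmetic behind that certification matrix can be checked with a short sketch. The counts and API names come from the paragraph above; the function name and the `OS-1`, `AIB-1` and `CPU-1` style labels are hypothetical placeholders, since the article does not name specific boards or processors.

```python
from itertools import product

def certification_matrix(n_os=3,
                         apis=("OpenGL", "DirectX", "Vulkan", "Metal"),
                         n_aibs=6,
                         n_cpus=6):
    """Enumerate every (OS, API, AIB, CPU) combination.

    Labels are placeholders for illustration only; the defaults mirror
    the counts quoted in the article.
    """
    oses = [f"OS-{i}" for i in range(1, n_os + 1)]
    aibs = [f"AIB-{i}" for i in range(1, n_aibs + 1)]
    cpus = [f"CPU-{i}" for i in range(1, n_cpus + 1)]
    return list(product(oses, apis, aibs, cpus))

matrix = certification_matrix()

# Distinct OS/API pairs, ignoring the hardware dimensions.
os_api_pairs = {(os_name, api) for os_name, api, _, _ in matrix}

print(len(os_api_pairs))  # 12
print(len(matrix))        # 432
```

Each added dimension multiplies the matrix, which is why suppliers test a chosen subset of a dozen or two rather than every combination.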

And yet, with all that effort to ensure the maximum, most reliable performance, the surveyed end users don’t fully appreciate or understand the certification linkage and importance between the processors (CPU and/or AIB), software driver, API, OS and application. If any one of those components in that chain fails, the system stops, and it burns time and money debugging and repairing it.

Fig. 2: ISV certification is more critical to technical decision makers as they configure and repair workstations. Certification is much less important in China than North America.

The time and money aspect of production engineering design work is the backbone of a workstation: “rock solid and bullet-proof,” as they say in the industry. So, given how important “failsafe operation” in a workstation is, you’d think the users would have a deeper understanding of it, if not an appreciation for its value. In the survey, certification doesn’t appear to be part of the evaluation criteria on managers’ purchasing checklists, and yet it ranks No. 1 in the “Decision Tree” chart in the summary section. However, the real value is to professional IT managers who get a guarantee that if they buy hardware that is certified with the application they are using, it will just work.

The value of certification is in the enterprise-level support, which ensures not only a good release but also the ability to provide support for multiple years. NVIDIA, for example, tests a majority of the combinations and maintains builds and regression testing across multiple operating systems, OS versions and versions of the ISV application. Further, they’ll test and support multiple ISV applications running together on the same system. This cross-testing helps ensure the best supported workflow and not just the best supported application.

The Value of Error Correcting Code (ECC)

The highly dense, high-speed random access memory (RAM) used in today’s computers is a miracle of technology, but it’s not fool-proof. Those microscopic memory cells can miss a signal, get confused by cosmic rays, or be thrown off by temperature and/or voltage surges, among other things. All of these errors become more common as you increase the amount of memory in a system. Knowing the inherent fragility of RAM, circuit and system designers have developed schemes to catch, and sometimes correct, such failures. Approaches developed to deal with unwanted bit-flips include immunity-aware programming, RAM parity memory and ECC memory. Of the lot, ECC is the more effective approach, and some versions also correct multiple-bit errors, including the loss of an entire memory chip.

Fig. 3: An ECC memory module looks the same as a regular DRAM module.

Some of the newer, high-speed versions come with a heat sink.

ECC memory was introduced in the late ’70s and early ’80s. An ECC-capable memory controller can detect and correct errors of a single bit per 64-bit “word” (the unit of bus transfer) and detect (but not correct) errors of two bits per 64-bit word.
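The correct-one, detect-two behavior described above can be illustrated with a textbook extended Hamming code. This is a minimal software sketch, assuming a 72-bit codeword (64 data bits, 7 Hamming check bits and 1 overall parity bit). The layout and the `encode` and `decode` names are illustrative assumptions; real memory controllers do this in hardware and may use different codes.

```python
# Assumed layout: index 0 holds an overall parity bit, indices 1..71 form
# a Hamming(71,64) code with check bits at the power-of-two positions.
CODE_BITS = 72
PARITY_POSITIONS = [1, 2, 4, 8, 16, 32, 64]
DATA_POSITIONS = [p for p in range(1, CODE_BITS) if p not in PARITY_POSITIONS]

def _syndrome(bits):
    """XOR of the indices of all set bits; 0 for a valid Hamming codeword."""
    s = 0
    for pos in range(1, CODE_BITS):
        if bits[pos]:
            s ^= pos
    return s

def encode(word):
    """Encode a 64-bit integer into a list of 72 bits."""
    bits = [0] * CODE_BITS
    for i, pos in enumerate(DATA_POSITIONS):
        bits[pos] = (word >> i) & 1
    s = _syndrome(bits)
    for p in PARITY_POSITIONS:
        if s & p:
            bits[p] = 1          # drives the syndrome to zero
    bits[0] = sum(bits) & 1      # makes the total number of ones even
    return bits

def decode(bits):
    """Return (word, status); word is None if the error is uncorrectable."""
    bits = list(bits)
    s = _syndrome(bits)
    overall_odd = sum(bits) & 1
    if s == 0 and not overall_odd:
        status = "ok"
    elif overall_odd:
        # Odd parity: treat as a single flipped bit located at index s
        # (s == 0 means the overall parity bit itself flipped).
        if s >= CODE_BITS:
            return None, "uncorrectable"
        bits[s] ^= 1
        status = "corrected"
    else:
        # Non-zero syndrome with even parity: two bits flipped.
        return None, "double-error detected"
    word = sum(bits[pos] << i for i, pos in enumerate(DATA_POSITIONS))
    return word, status

word = 0x0123456789ABCDEF
code = encode(word)

code[37] ^= 1                    # one flipped bit is corrected
single = decode(code)

code[5] ^= 1                     # a second flipped bit is only detected
double = decode(code)

print(single[1], double[1])
```

The eight extra bits per 64-bit word are the storage overhead that buys this protection.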

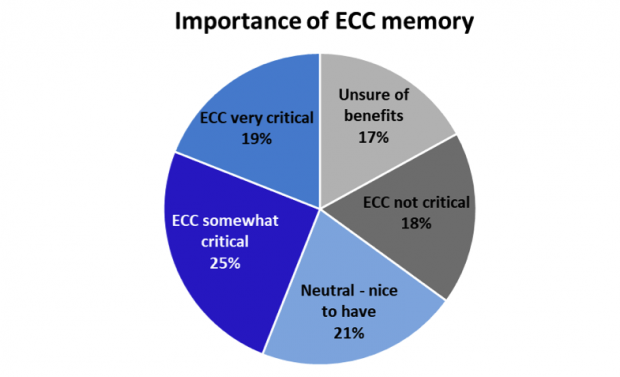

Technical decision makers responding to the survey said they saw minor advantages to ECC overall, but felt it was vital to CPU-heavy workloads.

Fig. 4: Respondents in China placed more awareness and importance on ECC than North American respondents, and ECC was seen as less critical in Europe, specifically France.

Some respondents felt ECC was critical for AEC users doing rendering and senior designers/engineers in M&E, Health/Bio and Energy/Oil & Gas. Those who have used systems with and without ECC reported they consider ECC a critical factor for improved reliability and productivity. Others think it depends on the workload of the end user. When users move to an Intel Xeon processor-based workstation, they have ECC.

Where Does the Performance Come From?

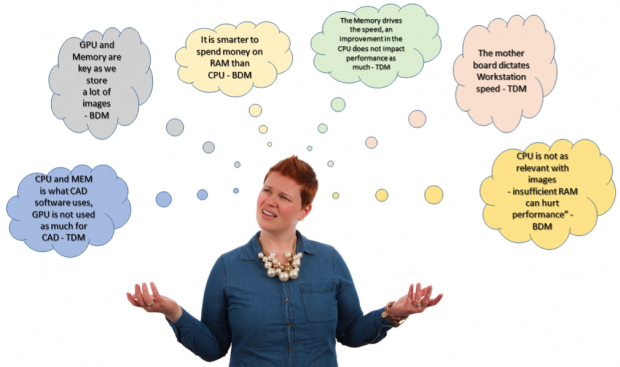

Technical decision makers and business decision makers who responded to the survey are confused about which component contributes the most to great and/or poor performance.

Business Decision Maker (BDM) and Technical Decision Maker (ITDM) respondents are confused about which component contributes to both great and poor performance.

The technical and business decision makers who responded to the survey aren’t computer experts any more than they have to be to get their primary job done, so they can be excused if they don’t know where the bottlenecks are in a system. Those bottlenecks shift over time from memory, to software, to CPU, etc. This does, however, indicate that the sources of information that the technical and business decision makers are using (ISVs, computer manufacturers, magazines, websites, newsletters, user groups and conferences) are not delivering sufficient information for them to make a more informed purchasing decision.

In previous studies conducted by us and others, price was never the primary decision factor—performance, reliability, vendor and certification were always ahead of it. With the expansion of the market to the entry level, price has crept up in importance. For the high-end users it is still low on the list.

Workstation Users Run Multiple Workloads

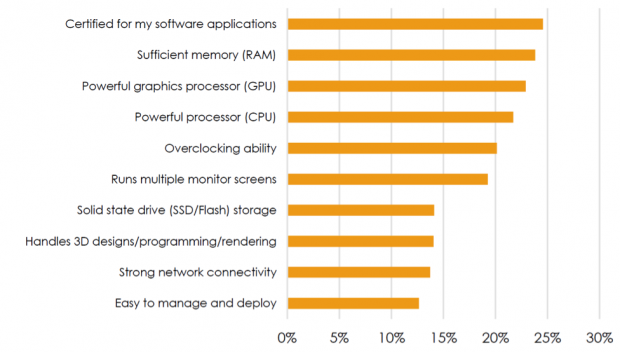

The technical and business decision makers surveyed who configure workstations to run their most critical workloads indicated that sufficient memory, followed by a high-end CPU and AIB, are their top workstation purchase priorities. Some respondents reported they have six to seven programs running at once while running multiple workloads.

Fig. 5: Decision tree for technical and business decision makers who recommend, approve, or actually purchase workstations.

For a group that wasn’t certain about the most critical part of the workstation, the ITDMs showed a surprising interest in overclocking and running multiple monitors. This is especially interesting because overclocking can run counter to reliability, the hallmark of a workstation. The BDMs’ priorities were multiple screens, sufficient RAM, and certification for their specific software programs. The managers from AEC firms had a higher than average priority for multiple monitors and RAM, while the priorities from manufacturing decision makers included overclocking and 3D capabilities. The managers in the U.S. and China placed a higher priority on CPUs and overclocking than the average respondent.

Importance of CPU and AIB: a Function of the Application

It wasn’t a surprise to learn the respondents said the application determines how a processor impacts productivity. The value of the CPU or AIB in a workstation depends greatly on the workloads and industry.

After I/O, memory and AIB rank high with AEC firms due to the large graphics files that are created and constantly updated. The newest generation of high-end AIBs contain up to 24GB of high-speed local RAM (GDDR5). The main system can house up to 2TB of ECC RAM (DDR4). As astounding as those numbers sound, they aren’t there for show. High-end users need all the local storage they can get because the 3D models are getting larger every day. The dream of all designers is to have the entire model in RAM, so they can move through it as fast as possible.

The CPU is ranked at or near the top of the components for Energy/Oil & Gas, Health/BioTech, Financial Services and M&E due to complex computations, rendering and creating 3D digital files.

Geographically, China ranks CPU as the most important component. In North America and Europe, the I/O and memory are ranked as most critical.

A workstation has to have a crazy amount of high-speed I/O, inside and out. Inside, it has to have dozens of PCIe lanes to support graphics AIBs (a high-end workstation can be equipped with up to four AIBs), high-speed SSD drives, Intel’s new high-speed Optane memory, specialized communications and special I/O subsystems such as high-speed cameras. I/O is, and always has been, a moving target. The demand for more and faster I/O is one of the things that motivates a user to buy a new workstation.

Future Trends: End Users Most Interested in 4K and Augmented Reality (AR)

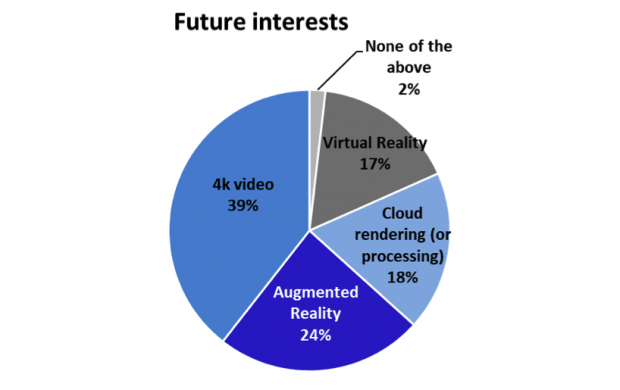

The respondents to the survey indicated they were most interested in 4K and augmented reality for current workloads, and were testing virtual reality. M&E, Manufacturing and Construction expressed the most interest in VR, followed by Energy/Oil & Gas, and Architecture firms who said they are testing VR (from qualitative interviews).

Technical decision makers indicated more interest in VR than average, while business decision makers expressed more interest in cloud rendering/processing.

Fig. 6: The future interests of workstation users.

Likewise, M&E, Manufacturing, and Construction firms stated they had more interest in VR than average, while AEC firms were more interested in cloud rendering/processing than average.

China and North America showed more interest in VR than average, and the U.S. and China are interested in 4K.

While head-mounted displays (HMDs) come to mind when seeing the terms “workstation” and “VR” together, the workstation plays a major role in creating the VR content that users are interacting with via HMDs.

However, VR can be a partial supplement for a CAVE (cave automatic virtual environment), and/or augment one. A CAVE is a virtual reality system that uses projectors to display images on three or four walls and the floor and/or ceiling.

Fig. 7: An engineer using a VR HMD inside a CAVE. (Join Gray Construction)

By creating a VR walk-through of a proposed facility in the very early stages, manufacturers can engage with equipment suppliers and vendors, which allows them to better plan how operations will be conducted in the facility.

Workstation Workloads in the Cloud

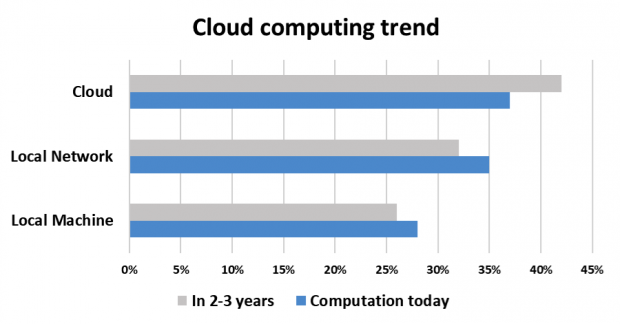

There is a strong progression to the cloud. The respondents indicated they are actively moving both storage and computation to the cloud in the near term. Less regulated firms in the U.S. and UK will see the largest jump to the cloud, while firms already in the cloud have not seen a drop off in workstation CPU needs.

Fig. 8: Technical decision makers see computation moving to the cloud more so than business decision makers.

AEC, M&E and Manufacturing see more movement to the cloud in both areas than average, while Finance and Health/Biotech are more resistant to moving to the cloud. China expects computation to stay more local, while the U.S. and UK are more open to the cloud.

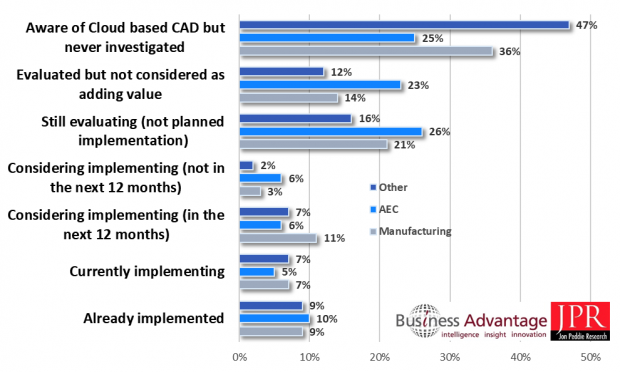

The survey results correlated well with the findings from our CAD in the Cloud study.

There really isn’t one “CAD market”; there are several CAD markets. CAD is such a universal tool that it is used in dozens of markets, and CAD usage in one field can look quite different from CAD usage in another. However, AEC and Manufacturing dominate the use of CAD. Those two segments comprise about 70% of the market, and for the purposes of analyzing the data the rest is categorized as “Other.”

Fig. 9: Companies’ current/planned usage of cloud-based CAD by industry sector.

Similar levels of current and planned implementation are seen across the main industry sectors. There are higher levels of ongoing evaluation in Manufacturing and AEC than in “other” sectors, of which almost half have not investigated “CAD in the Cloud” solutions at all.

CAD is not the only engineering application to move to the cloud. Finite element analysis (FEA), computational fluid dynamics (CFD) and subterranean geophysical exploration modeling are some other applications that need the distribution and storage capability of the cloud to allow secure collaboration worldwide. It’s a constant tradeoff among three arrangements: local processing with local storage, local processing with cloud storage, and cloud processing with cloud storage. Even within a company, on a given project, all three arrangements will be employed. There is no single answer (one size does not fit all). It’s the flexibility that remote computing and storage offer that has helped propel the productivity gains in the face of increases in data set sizes.

Product Introductions and Buy-cycles

Generally speaking, the workstation suppliers are introducing products every two years on average to keep up with expanding workloads and software upgrades. Survey respondents reported that they try to look at the workload software upgrade specifications a year before and plan their refresh around those requirements.

Large companies are moving toward two-year leases to automatically stock the best workstations. For AEC firms with users not involved in rendering, workstations are replaced every three to four years. Manufacturing, M&E and Energy/Oil & Gas are refreshing faster than average.

In the past, large organizations would use a purchasing agent or IT manager to pick and choose what workstation the engineers would get. Their motivations were different from engineering’s: IT was looking for stability and commonality for ease of maintenance and support, while engineering was looking for maximum performance. Typically, the engineers needing maximum performance were the minority and didn’t have a voice in the decision process. Today, that’s reversed. Large and small organizations have learned that, with the demands of time to market, product differentiation, traceability and quality control, it’s the engineers who need to be driving the selection of what workstation they use.

According to the survey, Germany and France have longer refresh cycles than other countries. The U.S. and UK have shorter refresh cycles than other countries.

Refresh cycles are different for every organization, usually driven by budget cycles, which are often out of sync with the realities of the market. Accountants and financial planning departments didn’t always factor in the upgrade schedules of ISVs and hardware suppliers, which left their engineers with outdated workstations and applications. Planning departments have learned to include a fudge factor in their budget to allow for surprises, because the ISVs don’t always have predictable or reliable update schedules. A general rule of thumb has been to plan for a refresh of hardware and update of software every two to three years. Longer than that and you find yourself behind the curve compared to competitors, and over time it only gets worse.

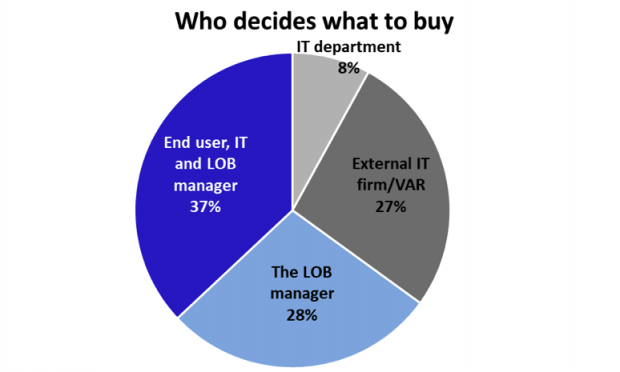

End Users Are Influencing Workstation Purchases

The survey indicated end users are very involved in the workstation purchasing process. The respondents indicated that while IT can make workstation decisions, they almost never make those choices independently. The department manager and end users tell IT what software is being used, and how many hours a day it will be under stress. Once IT figures out the workload and looks at the budget, then they buy the workstation.

Fig. 10: The workload, input from the user(s), and line-of-business (LOB) department managers are key in the purchase process.

The technical and business decision makers more often look to outside consultants/value-added resellers (VARs) to help with selecting workstations, and less so their IT department, if they even have one.

North American firms are more inclined to use VARs, while China is significantly less inclined, according to the survey results. The U.S. and UK give more autonomy to the line-of-business buyer, whereas China relies more on IT.

Summary

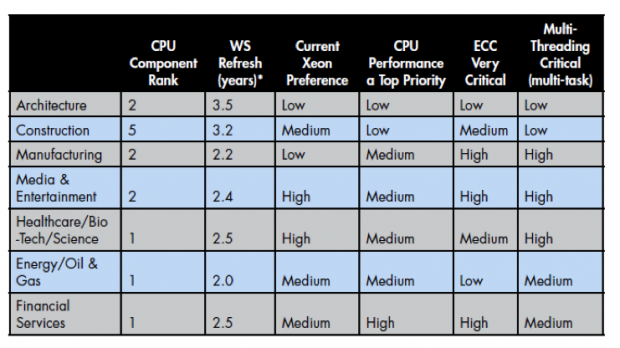

The workstation users who responded to the Intel survey quantified their opinions with regard to several criteria concerning a workstation and its procurement, which are summarized in the following table.

Table: Comparison of application needs for various components in a workstation

Health/Bio/Science, Energy/Oil & Gas and M&E are the applications where users expressed the highest CPU needs, reported faster refreshes and currently use Xeon processors. Manufacturing firms have fast refreshes (in part due to leases) and a need for ECC and multithreading. AEC firms give lower priorities to CPUs and refreshes.

Geographically, China ranks the CPU as the most important component, while in North America and Europe I/O and memory are most critical.

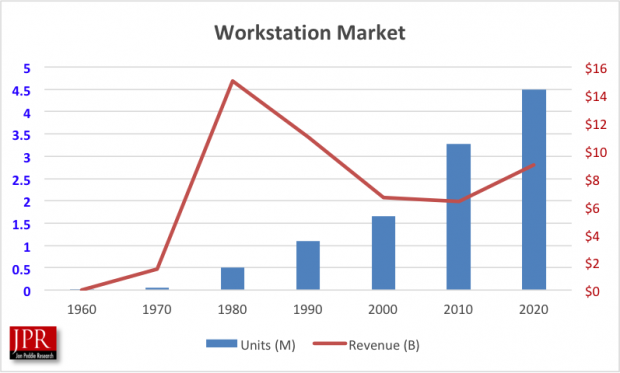

In my book, “The History of Visual Magic in Computers,” I trace the introduction of the workstation to the IBM 1620, a small scientific computer designed to be used interactively by a single person sitting at the console. Introduced in 1959, it was the first integrated workstation—just not a graphics workstation.

Fig. 11: IBM 1620 “CADET” personal scientific computer, circa 1959 (Courtesy of Crazytales (CC BY-SA 3.0))

Since then, workstations have gotten 10,000 times more powerful, 1,000 times smaller and 1,000 times less expensive. You can get a very powerful laptop workstation weighing less than 4 lbs. today for less than $2,000.

The market has grown from 50 units a year to over 4 million units a year, and even with a declining average selling price due to Moore’s law, the market has shown steady and robust growth in value.

Fig. 12: Workstation market over time.

All the things we enjoy today (air travel, fantastic movies and games, giant skyscrapers, clever consumer products and even our clothes) are, or have been, designed on a workstation. To say we couldn’t live without workstations would be an understatement. But workstations are workhorses and not very sexy, so they don’t get headlines, tweets, or much Facebook time.

Today’s workstations range from devices as small as a couple of packs of cigarettes to big boxes and everything in between, including laptops.

The survey captured some of the ideas users have about workstations, and some of their attitudes with regard to buying one (or 100). If it proved one thing, it was that opinions and needs vary geographically, by application and industry, and of course by budget. There isn’t a single workstation market; there are dozens of workstation markets.

More Info

Jon Peddie is president of Jon Peddie Research. Contact him via jonpeddie.com.
