June 1, 2018

Energy is the economic foundation of modern civilization. Competitive pressure to develop new energy resources and maximize the potential of existing ones is as strong as ever. Only a few years ago, the oil and gas exploration industry was described with phrases like “decreasing supplies” and “ever-increasing energy prices.” Then a series of technical innovations, made possible in part by high performance computing (HPC), turned the industry upside down. The U.S. became a net exporter of oil, and oil-producing countries around the world took advantage of new technology to discover new fields and new resources in existing fields.

Despite the turnaround in oil and gas, all forms of energy exploration and development remain highly competitive. In oil and gas, some new sites are already showing diminishing returns. Renewable energy generation methods are under increasing pressure to be more sensitive to local issues while still increasing their output. Political, cultural and environmental considerations must continue to be balanced with the economic necessity of abundant energy. Exploration, extraction and generation must all maintain peak efficiency. One key to this is advancing the use of simulation technology throughout the energy production cycle. The speed, depth and cost of simulation remain critical success factors.

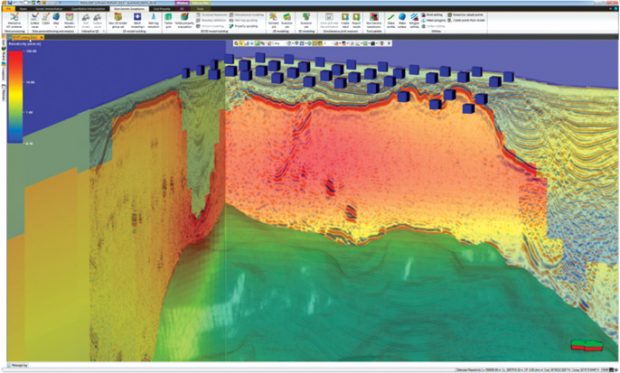

A 3D inversion model overlain on a seismic cube for integrated interpretation of horizons. Image: Schlumberger Omega

The energy industry has been at the forefront of HPC ever since engineers began using clustered servers and workstations. Today, the energy sector continues to push the envelope for advanced simulation: oil and gas companies simulate subsea conditions in water depths of more than 2,000 meters (where most of the world’s remaining oil and gas resources lie), planners model the energy needs of smart cities to bolster sustainability efforts, and researchers predict global wind patterns to inform wind farm designs.

Demanding Software Suites

The Schlumberger Omega geophysical data processing platform is an example of the state of the art in energy simulation software. Schlumberger and its competitors can no longer sell only point solutions as software products; they must sell suites that provide results for a wide range of technical problems. These suites combine the latest science in seismic data analysis with scalable processing and extensible software. The Omega platform, in particular, combines seismic, electromagnetic, microseismic and vertical seismic profile data. Such an integrated approach makes it easier to bring together data and expertise from many sources. Other modules can be added to turn analysis views into editable models with the latest imagery features.

Such software tools place heavy demands on their computing platforms, says Ed Turkel, HPC strategist for Dell EMC. “The increased need for high-resolution substrata imagery means more computation. This is the heaviest use of HPC in industrial applications.” Once a field is analyzed and visualized, the data becomes a design model for directing onsite operations. “The question becomes, how to get every drop,” he says.

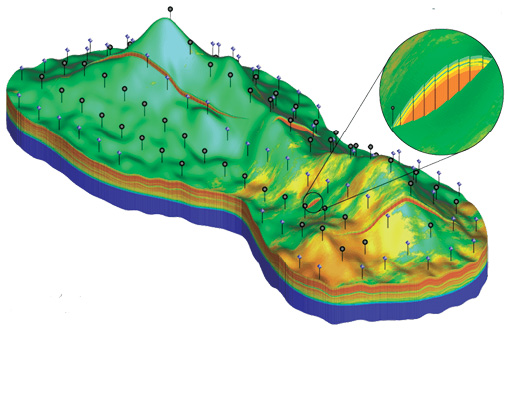

Echelon is a high performance petroleum reservoir simulator optimized for HPC clusters and high-end accelerators. Image: Stone Ridge Technology

One example of where HPC-powered simulation matters in the energy sector is fracking (hydraulic fracturing), a hotly debated method for opening up new paths to oil or gas. Fracking is only possible with a detailed visualization of substrata. “All this technology is absolutely required to maintain production levels,” adds Turkel.

Schlumberger estimates that HPC clusters run its Omega-based simulations 1,000 times faster than a single local workstation. Depth-point inversions are available in 15 seconds instead of 4 to 5 minutes.
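
Converting the quoted depth-point inversion times to the same unit gives the per-inversion speedup. The helper `speedup` below is a hypothetical name used only for this illustration, and the inputs are the times stated above. For a single depth-point inversion the ratio comes out to 16x to 20x, a much narrower measure than the 1,000x figure Schlumberger gives for its Omega-based simulations as a whole.

```python
def speedup(baseline_s: float, accelerated_s: float) -> float:
    """Ratio of baseline run time to accelerated run time (both in seconds)."""
    return baseline_s / accelerated_s


# Times quoted in the article: 4 to 5 minutes on a workstation, 15 seconds on a cluster.
low = speedup(4 * 60, 15)
high = speedup(5 * 60, 15)
print(f"Depth-point inversion speedup: {low:.0f}x to {high:.0f}x")
```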

HPC clusters can take advantage of parallel processing on a vast scale. In 2017, ExxonMobil completed a study on optimizing reservoir performance predictions using parallel computing. ExxonMobil ran the test with the National Center for Supercomputing Applications, a dedicated supercomputing facility, but its success is an indication of what is coming to the larger market. The test used 716,800 processors operating in parallel. It was the largest number of processors used in an oil and gas application, and one of the largest simulations reported for any industry segment.
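
Amdahl's law is a standard way to reason about why processor counts like this pay off only when nearly all of the work runs in parallel. With a parallel fraction p spread over N processors, the ideal speedup is S(N) = 1 / ((1 - p) + p / N), so even 1% serial work caps the speedup at 100x no matter how many processors are added. The sketch below is a textbook model, not a description of how the ExxonMobil test was measured; the parallel fractions are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Ideal speedup under Amdahl's law for a fixed-size problem."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


N = 716_800  # processor count reported for the 2017 test
# Hypothetical parallel fractions, chosen only to show the trend.
for p in (0.99, 0.999, 0.99999):
    print(f"p = {p}: speedup on {N:,} processors = {amdahl_speedup(p, N):,.0f}x")
```

Amdahl's law assumes a fixed problem size. Large reservoir runs usually grow the model along with the processor count, a regime that Gustafson's law describes more optimistically.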

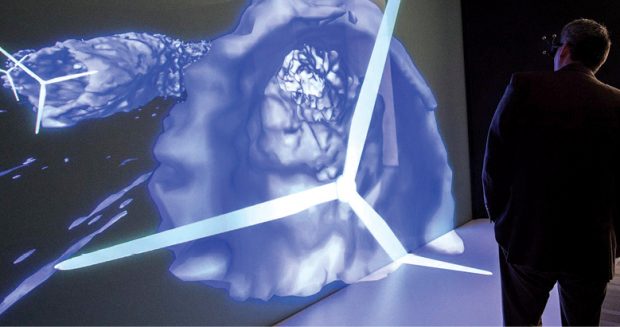

The National Renewable Energy Laboratory Computational Science Center provides software, services, and time on its HPC cluster for wind energy research. Image: NREL

Seismic processing produces detailed imagery that a geologist or geophysicist must then interpret. Several vendors are working on new software that takes a machine learning approach to this task, says Turkel. “Oil companies have enormous volumes of data, including old tape silos,” he says. The day is not far off when machine learning routines will reprocess old data and recommend what should be referred to a human for detailed analysis.

Visualize the Wind

The design of wind turbines is a traditional mechanical engineering challenge, using modern simulation software to explore weight, strength, shape and materials. Choosing where to locate wind turbines requires climate modeling and weather simulation, a more challenging task. Because a turbine is anchored in place while its rotor moves with the wind, torques and loads can change rapidly. Some wind turbines are placed offshore, which adds ocean dynamics to the complexity.

HPC and simulation are used not only in the design of wind turbines but also to determine the best placement for them. Image: Thinkstock/Mimadeo
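
Placement matters so much because the power available in the wind grows with the cube of wind speed: P = 0.5 · ρ · A · v³ · Cp, where ρ is air density, A the rotor's swept area, v the wind speed and Cp the power coefficient (theoretically capped at 16/27, the Betz limit). A site averaging 8 m/s rather than 6 m/s offers about (8/6)³ ≈ 2.4 times the power. The sketch below assumes a hypothetical 120-meter rotor, sea-level air density and Cp = 0.40; it ignores the cut-in, rated and cut-out limits of real turbines and the spread of wind speeds over a year, which is where weather simulation comes in.

```python
import math

AIR_DENSITY_KG_M3 = 1.225  # assumed standard sea-level air density


def wind_power_watts(
    wind_speed_m_s: float,
    rotor_diameter_m: float,
    power_coefficient: float = 0.40,  # hypothetical; Betz limit is 16/27
    air_density: float = AIR_DENSITY_KG_M3,
) -> float:
    """Power a rotor extracts from a steady wind: P = 0.5 * rho * A * v^3 * Cp."""
    swept_area_m2 = math.pi * (rotor_diameter_m / 2.0) ** 2
    return 0.5 * air_density * swept_area_m2 * wind_speed_m_s ** 3 * power_coefficient


# Compare two hypothetical candidate sites for the same 120 m rotor.
for v in (6.0, 8.0):
    print(f"{v} m/s -> {wind_power_watts(v, 120.0) / 1e6:.2f} MW")
```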

Researchers at the National Renewable Energy Laboratory (NREL) in Golden, Colorado, have developed a variety of computer modeling and software simulation tools to support the wind industry with state-of-the-art design and analysis capabilities, all optimized for HPC use. In February, the NREL Computational Science Center launched a new website to make its research on HPC for energy-related systems more readily available. NREL also makes its in-house HPC system available for research teams.

About the Author

Randall S. Newton is principal analyst at Consilia Vektor, covering engineering technology. He has been part of the computer graphics industry in a variety of roles since 1985.