What’s Happening to Cluster Computing?

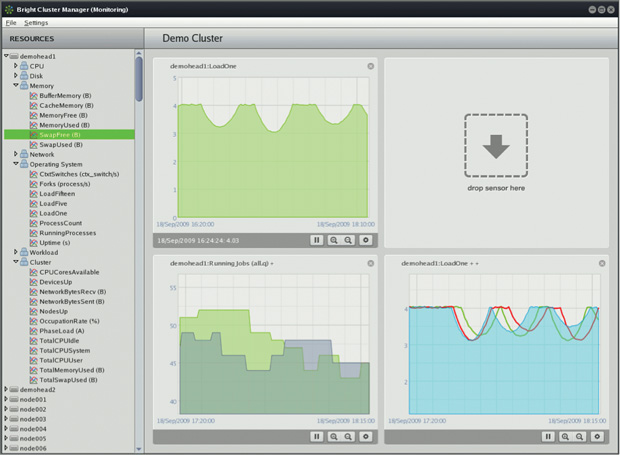

Bright Computing reduces cluster management—including extending the cluster onto a cloud infrastructure—to a desktop dashboard. Image courtesy of Bright Computing.

November 1, 2016

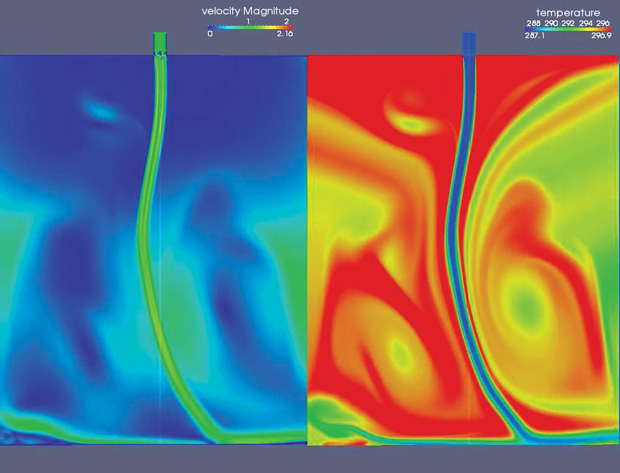

Biscari Consultoria tested UberCloud for running a highly coupled computational fluid dynamics simulation using Amazon Web Services as an alternative to extending an existing in-house cluster. Image courtesy of UberCloud.

In 1980, a high-end computer for demanding engineering projects could be described by the three Ms: 1 million instructions per second, 1 million bytes of memory and 1 million pixels for graphics. In 2016, the average smartphone surpasses those numbers by wide margins. The computing power we once saw as high-end is now a commodity.

In recent years, engineering firms have met their ever-increasing need for computational performance with cluster technology, which links multiple servers to work as one logical unit for high-performance applications such as simulation and analysis. Advances in software services like virtualization and server grids make it easier for engineering companies to expand their existing clusters to serve growing internal demand. Prices dropped and power increased for years. Many computers known as supercomputers today are just very large clusters.

What we call cloud computing today is rentable time on a massive array of servers, available on demand from any location. Cloud technology is moving to the forefront, offering computing as a utility, not an infrastructure investment. This new wave of computing raises new questions: What happens to on-site cluster computing? Is the democratization of local resources ending with the move to off-site cloud? What happens to existing high-performance computing (HPC) installations?

Democratized HPC as the New Normal

The evolution of computer power is a story of standardization, not differentiation. From proprietary minicomputers to RISC/Unix workstations to x86 PCs, the price/performance ratio continues to favor commodity products. When hardware commoditizes, software follows with standardization. Today’s web is built on an open-source software stack of Linux, Apache, MySQL and PHP. A similar stack is evolving for HPC clusters around the new OpenHPC, an open-source software project seeking to assemble an integrated collection of HPC-centric software/middleware components. Combined with efforts from leading HPC hardware and services vendors like Dell EMC, HP Enterprise and IBM, the day is getting closer when the average enterprise or the typical engineering department will consider supercomputer capabilities as typical as running a web server or a PLM (product lifecycle management) installation is today.

But where will those supercomputer capabilities reside? On-premise as an upgraded cluster, or off-premise in a cloud? The answer: “All of the above and more.” Software developers are coming up with technologies to repurpose existing clusters, help on-site clusters work in a cooperative fashion with off-site cloud services and provide the key capabilities of cloud in a private setting. The same scalability and accessibility can be provided on a smaller scale by existing clusters.

Containers for Shipping Software Packages

UberCloud is a company with a big mission: to democratize the HPC experience so that clusters, grids and clouds all become one happy computational family. Its business model combines the open-source community ethic of shared resources and open development with a commercial set of features to create what the company calls “computing-as-a-service.”

UberCloud delivers engineering services ready to execute out of the box using what it calls container technology. The container metaphor draws on how standardized containers revolutionized the intercontinental shipping industry in the ’80s. The containers are available for many of the most popular engineering products, including several ANSYS products; Siemens PLM Software’s CD-adapco Star-CCM+ and Red Cedar HEEDS; COMSOL Multiphysics; Nice DCV; Dassault Systèmes SIMULIA Abaqus; Numeca FINE/Marine and FINE/Turbo; OpenFOAM and others. Customers are using UberCloud containers to run these products on cloud resources from Advania, Amazon, CPU 24/7, Microsoft Azure, Nephoscale and OzenCloud.

UberCloud says its container approach eliminates the need for a hypervisor to manage virtual machines. They also claim near-bare-metal results for engineering applications. “When we came across UberCloud’s new application container technology and containerized all our CFD software packages, we were surprised about the ease of use and access to any computing system on demand,” says Charles Hirsch, founder of Numeca.

Any service where multiple CPUs and servers are running as a unit looks like a cloud to UberCloud.

Simplifying Clusters

“Clustering is hard,” says Bill Wagner, CEO of Bright Computing. “Talk to anyone who has done it. It is difficult to build a cluster; it is difficult to manage it over time; it is difficult to monitor it.” Bright Computing brings a dashboard approach to cluster deployment and management, and claims their software can take a client from bare metal to deployment in under an hour.

One advantage Bright Computing brings to managing clusters is the ability to “cloud burst” any application running in the Bright environment. This dynamically extends the use of CPUs beyond the premises to either a private or public cloud, as needed.
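The idea behind cloud bursting can be illustrated with a minimal sketch. This is not Bright Computing's software or API; the names `Job`, `place_jobs` and `max_cloud_cores` are hypothetical, and the policy (fill on-premise cores first, send overflow to the cloud up to a cap) is an assumed simplification of what a real cluster manager would do.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str   # label for the simulation or analysis run
    cores: int  # number of CPU cores the job needs


def place_jobs(jobs, local_cores, max_cloud_cores):
    """Place each job on-premise if it fits, otherwise burst it to the cloud.

    Hypothetical policy: jobs are considered in order. A job that fits in the
    remaining local cores runs locally; otherwise it runs in the cloud if the
    burst cap allows; otherwise it waits in the queue.
    """
    placement = {}
    local_free = local_cores
    cloud_free = max_cloud_cores
    for job in jobs:
        if job.cores <= local_free:
            local_free -= job.cores
            placement[job.name] = "local"
        elif job.cores <= cloud_free:
            cloud_free -= job.cores
            placement[job.name] = "cloud"
        else:
            placement[job.name] = "queued"
    return placement


if __name__ == "__main__":
    jobs = [Job("cfd", 64), Job("fea", 48), Job("sweep", 200)]
    print(place_jobs(jobs, local_cores=96, max_cloud_cores=128))
```

In this sketch the cap on cloud cores stands in for a budget limit: work spills off-site only when the in-house cluster is full, and only up to an amount the firm has agreed to pay for.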

The Institute of Aircraft Design at the University of Stuttgart is a Bright Computing customer. Routine IT requirements are handled by university IT, but the institute runs its own cluster for research. Its most demanding simulations were taking weeks to run. When the open-source cluster manager OSCAR failed to work with upgraded cluster hardware, the institute tested Bright Cluster Manager. “Upgrades that used to take us weeks to complete can now be done in a few days,” says Alexander Schon, a member of the institute’s technical staff. “It is very user friendly and greatly reduces the time necessary for managing a cluster.”

OpenStack is an open-source framework for turning clusters into private clouds, but no one will tell you it is easy to use. Bright Computing has a special edition of Cluster Manager fine-tuned for the OpenStack environment. It is part of Bright Computing’s cluster-as-a-service initiative, which now offers a management framework for HPC and Hadoop systems as well as OpenStack deployments. When a temporary extension to one of these environments is required for a project, Cluster Manager can open up new cluster resources as the desired system. The result: A cloud-like extended deployment behind the firewall or beyond into a public cloud setting as required.

Building Commodity Supercomputers

Hardware vendors are still selling servers for deployment in clusters, but the focus has changed from the CPU to the total infrastructure. Dell EMC and HP Enterprise both offer a variety of services for cluster deployment and management, and have partnerships with the software vendors already mentioned here.

The goal of all these efforts is commodity supercomputing. Engineering companies are not ready to toss out their existing infrastructure for cloud computing without practical results. Empowering existing cluster resources with easier deployment, greater ease of use and the ability to extend onto a public or private cloud as needed gives new life to clusters. Cluster commoditization via software innovation does not eliminate the need for clusters, but instead increases their utility for engineering.

This new emphasis on ubiquitous computing resources achieved through software makes it easier to adjust to changes. UberCloud, Bright Computing and others are making every computer in reach a node on the existing cluster network, up to and including the thousands of CPUs available on demand from public cloud services.

About the Author

Randall S. Newton is principal analyst at Consilia Vektor, covering engineering technology. He has been part of the computer graphics industry in a variety of roles since 1985.
